Text Recognition in iOS with Tesseract OCR

OCR is an old technology. Back in my early days with my own computer, I bought a handheld scanner with OCR capabilities and experimented with handwritten notes to see whether it could recognise my hideous scribbling.

As far as I recall, it wasn't that great.

I'm currently building a tiny app for taking notes on podcasts using nothing but screenshots of the lock screen. I mostly listen to podcasts in the car on my commute, so I can't grab my phone and open Evernote to jot down what was said.

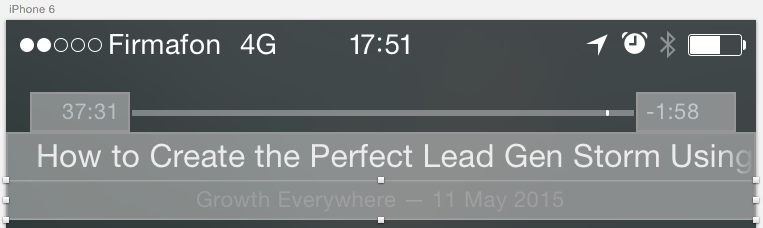

But it's easy enough to take a screenshot of the lock screen, which I often do:

The problem is that I often forget to go through them, and I end up deleting the photos weeks later.

Text recognition

As you can see in the lock screen screenshot above, a lot of information is already there:

- Podcast name

- Episode title

- Episode date

- Elapsed time

- Remaining time

All you need to do is turn the screenshot into bits of text using OCR.
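OCR only gives you strings. The app still has to turn those strings into something it can store and use. The sketch below is minimal and rests on a few assumptions. The lock screen is assumed to show times as `m:ss` or `h:mm:ss`, with a leading minus sign on the remaining time. The `LockScreenInfo` type and `seconds(fromTimestamp:)` function are hypothetical names for illustration and are not part of Tesseract. Under those assumptions, the fields listed above could be modelled like this:

```swift
import Foundation

// Hypothetical model for the fields read off a lock screen screenshot.
struct LockScreenInfo {
    var podcastName: String
    var episodeTitle: String
    var episodeDate: String
    var elapsedSeconds: Int?
    var remainingSeconds: Int?
}

// Hypothetical parser: turns an OCR'd timestamp such as "12:34", "1:02:03"
// or "-45:10" into a number of seconds, or nil if it isn't a valid timestamp.
func seconds(fromTimestamp text: String) -> Int? {
    var trimmed = text.trimmingCharacters(in: .whitespacesAndNewlines)
    // Assumption: remaining time carries a leading minus (or an en dash after OCR).
    if let first = trimmed.first, first == "-" || first == "\u{2013}" {
        trimmed.removeFirst()
    }
    let parts = trimmed.split(separator: ":", omittingEmptySubsequences: false)
    guard (2...3).contains(parts.count) else { return nil }
    var total = 0
    for (index, part) in parts.enumerated() {
        guard let value = Int(part), value >= 0 else { return nil }
        // Every field after the first (minutes, seconds) must be below 60.
        if index > 0 && value >= 60 { return nil }
        total = total * 60 + value
    }
    return total
}
```

Returning `nil` rather than guessing keeps a misread digit from silently becoming a wrong position in the episode.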

Enter Tesseract

Tesseract is an open-source OCR engine that has been sponsored by Google since 2006. It was originally developed at HP between 1985 and 1994.

Luckily, installing Tesseract on iOS is very simple. The Tesseract-OCR-iOS project on GitHub supports CocoaPods and leaves it up to you to download the language pack you need.

Once you've installed the pod and added the language files, it's quite simple to start recognising text. Here's an example in Swift:

```swift
// File-level imports
import UIKit
import TesseractOCR

// The rest runs inside a UIViewController method. `self.tesseract` is a G8Tesseract
// set up with an installed language pack, and `imageData` holds the screenshot.
guard let screenshot = UIImage(data: imageData) else { return }
self.tesseract.image = screenshot

// Point Tesseract at one region of the screenshot (in pixels) and return its text,
// falling back to an empty string if no text is available.
func recognizeText(in rect: CGRect) -> String {
    self.tesseract.rect = rect
    self.tesseract.recognize()
    return self.tesseract.recognizedText ?? ""
}

let elapsed = recognizeText(in: CGRect(x: 24, y: 72, width: 100, height: 40))
let remaining = recognizeText(in: CGRect(x: 630, y: 72, width: 100, height: 40))
let title = recognizeText(in: CGRect(x: 0, y: 112, width: 750, height: 50))
let name = recognizeText(in: CGRect(x: 0, y: 160, width: 750, height: 40))

// Show the recognised fields in a simple alert.
let alertVC = UIAlertController(title: "Tesseract",
                                message: "Podcast: \(name)\nEpisode: \(title)\nElapsed: \(elapsed)\nRemaining: \(remaining)",
                                preferredStyle: .alert)
alertVC.addAction(UIAlertAction(title: "OK", style: .cancel, handler: nil))
self.present(alertVC, animated: true, completion: nil)
```
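Raw OCR output often needs a little tidying before it is displayed. Recognised text commonly ends with line breaks, and a long episode title may wrap onto several lines. A small helper can normalise each string before it goes into the alert. The sketch below is one way to do it; `cleanedOCRText` is a hypothetical function and is not part of Tesseract-OCR-iOS:

```swift
import Foundation

// Hypothetical helper: drops leading/trailing whitespace and collapses any run of
// spaces or line breaks into a single space, so wrapped lines read as one string.
func cleanedOCRText(_ raw: String) -> String {
    raw.components(separatedBy: .whitespacesAndNewlines)
        .filter { !$0.isEmpty }
        .joined(separator: " ")
}
```

For example, `"Episode 12:\nThe Title\n\n"` becomes `"Episode 12: The Title"`.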

imageData is an NSData representation of the screenshot. I use Photos.framework to query all images on the device and keep only those whose size exactly matches the device's screen size.
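The screen-size filter comes down to comparing each image's pixel dimensions with the screen's. In a real app those values would come from `PHAsset`'s `pixelWidth`/`pixelHeight` and `UIScreen.main.nativeBounds`. The sketch below keeps only the comparison so it stands on its own. The `ImageSize` type and `screenshots(in:matching:sizeOf:)` function are hypothetical:

```swift
import Foundation

// Hypothetical stand-in for an image's pixel dimensions.
struct ImageSize: Equatable {
    let width: Int
    let height: Int
}

// Keeps only the assets whose pixel size exactly matches the screen. This picks out
// screenshots in general; it can't tell a lock screen screenshot from any other.
func screenshots<Asset>(in assets: [Asset],
                        matching screen: ImageSize,
                        sizeOf: (Asset) -> ImageSize) -> [Asset] {
    assets.filter { sizeOf($0) == screen }
}
```

Because the match is exact, an image whose width and height are swapped relative to the screen is excluded too.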

The rects I keep switching between are the regions of the image where the desired information is located, as marked by the masks on the screenshot here:

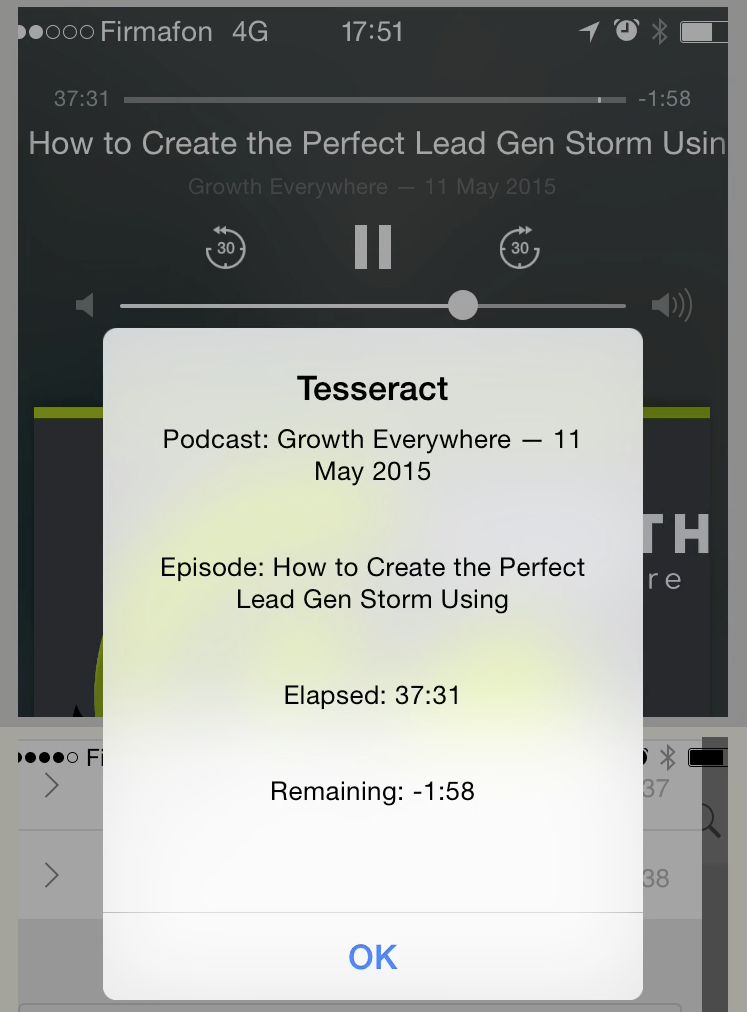

The result of the text recognition above couldn't be more accurate.

Want an invite via TestFlight?

I still have some way to go on this before submitting to the App Store, but I'd love to get some feedback. If you'd like to test the app, sign up here and I'll e-mail you the details when it's ready for testing.